So I finished a new book a week or so back, and since I’m not really using the hideous mess that twitter has become, I thought people might be interested in the overall argument. The book is off under review just now, but I gave a talk about some of the ideas both at GLOW and more recently at QMUL. The QMUL slides are here.

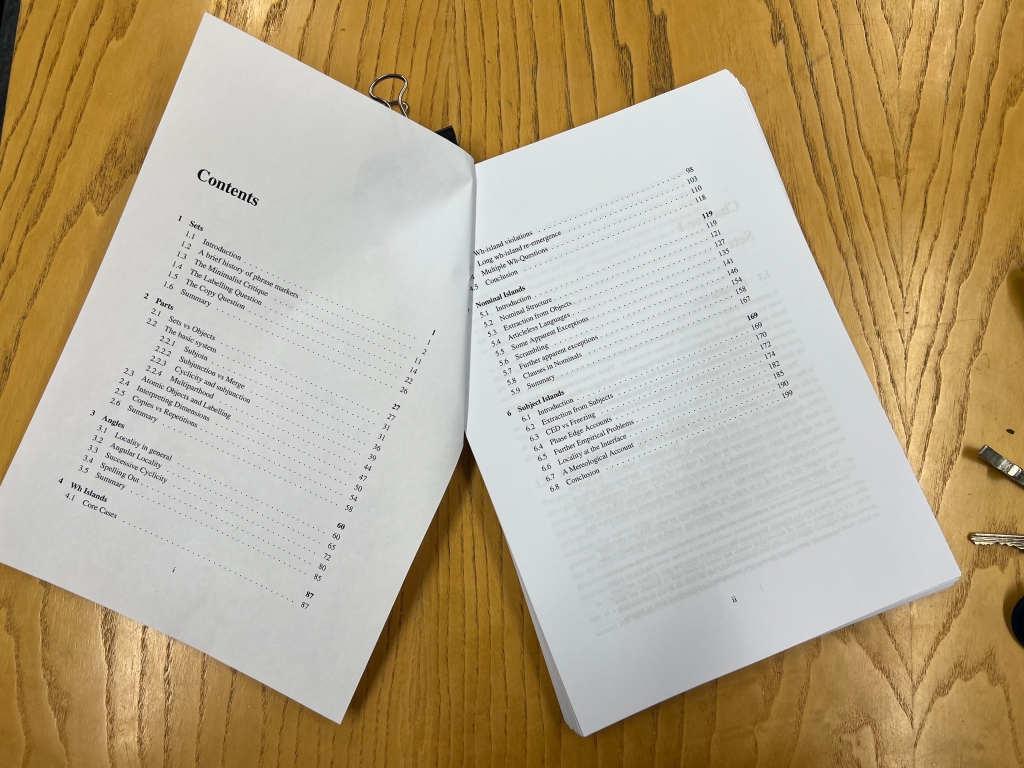

The argument of the book starts from some history. Chomsky’s 1955 magnum opus, The Logical Structure of Linguistic Theory, delineated a view of syntactic objects as sets of strings. I trace the arguments about the nature of syntactic objects through the 1970s and 1980s, focussing on Stowell’s development of the X-bar theoretic view, the reasons why order was removed from the theory, and then the minimalist critique that Chomsky developed of the X-bar theoretic perspective in the early 1990s, his introduction of the operation Merge, and the various changes of perspective since then about what Merge does and why it does it. I then focus on two shortcomings of that model. The first is that when Merge builds a set-theoretic object, that new object needs to be categorized (have a label) and that requires a new sub-theory to do the labelling. The second is that the way that Merge models long distance dependencies is via the construction of `copies’ of syntactic objects. But these copies also require a special sub-theory to distinguish them from items that are formally identical, but not constructed via Merge operating long distance. I argue it would be better to have a system that didn’t require these extra sub-theories.

I then develop a new system based on the fundamental idea that syntactic objects are not set-theoretic but rather they are mereological. Usually, in linguistics, classical extensional mereology is appealed to in the analysis of mass terms, plurals, imperfective aspect etc., and its attractiveness in these domains is because it provides a way of modelling undifferentiated parthood. However, syntactic objects are clearly highly differentiated, so classical mereology is insufficient. Instead I introduce the notion of a hylomorphic pluralistic mereological system from the work of philosophers like Fine and Koslicki, and argue that this is a better model for phrase structure than sets. Hylomorphic mereologies take objects to consist not just of their parts, but also of the way that their parts are composed, and pluralistic mereologies allow parts to be part of objects in different ways (what I call dimensions of parthood). This provides the required differentiation. The core notion of mereology is the notion of (proper) part, which is an Irreflexive Transitive relation (compared to set-membership, which is an Irreflexive Intransitive relation). I therefore define an alternative to Merge, which I call Subjoin, which takes two objects and makes one of them part of the other. I also show how the point in a derivation can be used to differentiate different `dimensions’ of parthood. Subjoin provides the way that parts are parts of their whole, and dimensions provide the different `flavours’ of that combinatory mechanism. I suggest that the dimensions distinguish extended projection complement relations from specifier relations. This new system, it turns out, is no more complex than the set theoretic system, but it needs no special subtheory for labels (since, when you make an object part of another object, nothing changes in terms of the labels).
It also derives syntactic objects where a single object can be part of multiple other objects, rather than requiring copies, so the system is a multidominance (or rather multiparthood) system, meaning that it is straightforward to distinguish `copies’ from non-copies. The chapter concludes that the new system is at least as simple as the standard set theoretic system, but an improvement in that it suffers neither from the labelling nor from the copy problems.
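
As a rough illustration only, here is a toy Python sketch of the two properties just described: subjoining leaves labels untouched, and a single object can be a part of more than one other object without any copying. This is not the formal definition from the book. The class `SynObj`, the functions `subjoin` and `proper_parts`, and the use of the integers 1 and 2 for complement-like and specifier-like dimensions are all assumptions made for the example, and the sketch imposes no restrictions on what may subjoin to what.

```python
from dataclasses import dataclass, field

@dataclass(eq=False)  # eq=False keeps identity semantics, so one object can sit in several wholes
class SynObj:
    label: str
    parts: dict = field(default_factory=dict)  # dimension -> list of immediate parts

def subjoin(part, whole, dimension):
    """Toy Subjoin: make `part` an immediate part of `whole` in `dimension`."""
    whole.parts.setdefault(dimension, []).append(part)
    return whole  # the whole keeps its own label; no labelling step is needed

def proper_parts(obj):
    """All proper parts of `obj`: the transitive closure of immediate parthood."""
    found, frontier = [], [obj]
    while frontier:
        current = frontier.pop()
        for group in current.parts.values():
            for p in group:
                if not any(p is q for q in found):
                    found.append(p)
                    frontier.append(p)
    return found

# Assumed toy structure: dimension 1 for complement-like parts, 2 for specifier-like parts.
V, D, T = SynObj("V"), SynObj("D"), SynObj("T")
subjoin(D, V, 2)   # D is a 2-part of V
subjoin(V, T, 1)   # V is a 1-part of T
subjoin(D, T, 2)   # the very same D is also a 2-part of T: multiparthood, not a copy

same_object = T.parts[2][0] is V.parts[2][0]
labels = sorted(p.label for p in proper_parts(T))
```

In this sketch `same_object` is true because `D` is literally one object with two wholes, which is the sense in which no separate theory of copies is needed, and `T.label` is unchanged by either subjoin.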

I then turn to the implications of the mereological approach for theories of locality. I show how a simple extension of the most local relations between objects in the system provides a theory of locality that answers four questions which are not properly answered by the standard system: why are there locality domains? Why are they what they are? Why are there exceptions to locality domains? Why are these what they are? In the mereological theory of syntactic objects, there are locality domains because the part relation is Transitive, but Dimensionality blocks that relation. This means that some syntactic objects are parts of another object, but they are not part of that object in a consistent dimension. If syntax `sees’ parts only via dimensions, then those ‘disconnected’ parts are invisible to it. The domains are what they are because of the two dimensions of syntax: essentially the innards of specifiers are `invisible’ from outside the specifier, so specifiers are locality domains. There are exceptions to those domains because of Transitivity. If something is a specifier of a specifier of an object, then Transitivity implies it is a specifier of that object. But then it is local to that object, which makes the `edges’ of specifiers transparent, while the insides of specifiers are opaque. This also answers the final question as to why the exceptions are what they are. With this theory in place, I define a locality principle (Angular Locality) which ensures the results just discussed, and show how, as a side effect, it also rules out super-local dependencies (such as one between a complement and a specifier of a category), and super-non-local dependencies (the equivalent in this system of parallel Merge and Sidewards Movement). 
I illustrate how the system works through an analysis of Gaelic wh-extraction, including new data about the interaction of the spellout of an object in a position to which it has subjoined, vs spellout of the object to which it has subjoined. The system also extends elegantly to partial-wh-movement constructions and clausal pied-piping as a means of expressing long-distance phrase-structural dependency. I also examine how the system maps from mereological structures, which have only hierarchical dimensionality, to linear structures which are required for most kinds of externalization of syntax (pronunciation or signing), adopting a Svenonius/Ramchand style approach to lexicalization of extended projections, and propose a way of essentially extending that to internally subjoined objects (moved constituents).
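
The contrast drawn in the locality discussion above, between the transparent edge of a specifier and its opaque inside, can be sketched in a few lines of Python. This is a deliberately simplified reading of the summary in this post, not the book's definition of Angular Locality. The triple encoding, the names `RELATIONS` and `parts_of`, and the placeholder objects X, S, E and K are all hypothetical. The only idea modelled is that a part counts as visible from a whole if it can be reached by following links of one and the same dimension.

```python
# Each triple (whole, dimension, part) is one immediate part relation (hypothetical encoding).
RELATIONS = [
    ("X", 2, "S"),  # S is a specifier-like 2-part of X
    ("S", 2, "E"),  # E is the 2-part of S: the edge of the specifier
    ("S", 1, "K"),  # K is a 1-part of S: the inside of the specifier
]

def parts_of(whole, relations, dim=None):
    """Transitive parts of `whole`; if `dim` is given, follow links of that dimension only."""
    found, frontier = set(), [whole]
    while frontier:
        current = frontier.pop()
        for w, d, p in relations:
            if w == current and (dim is None or d == dim) and p not in found:
                found.add(p)
                frontier.append(p)
    return found

# Every part of X, regardless of dimension.
every_part = parts_of("X", RELATIONS)
# Parts reachable through a single consistent dimension (toy notion of visibility).
visible = parts_of("X", RELATIONS, dim=1) | parts_of("X", RELATIONS, dim=2)
# Parts of X that are only reachable by mixing dimensions.
opaque = every_part - visible
```

Here E, the specifier of the specifier, ends up in `visible`, while K, which is reached only by switching from dimension 2 to dimension 1, ends up in `opaque`, even though both are parts of X.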

The bulk of the rest of the argument focusses on how the system plays out in the domain of islands, one of the most central topics in syntax since the 1960s. I first show how the system predicts wh-islands: because of the interaction between Transitivity and Dimensionality, if a syntactic object has something subjoined to it, it is impossible to subjoin something else to it. But the result that emerged from the previous chapter showed that the only possible means that an object can subjoin from inside a specifier is through that specifier’s own specifier (because of the way that Transitivity works). This means that wh-islands (almost) follow from the system. The standard Bare Phrase Structure system does not have this consequence, since it allows multiple specifiers, and so has multiple positions at phase edges. This is why in the standard system, wh-islands are usually explained via minimality effects rather than phase theory. In the mereological system, they emerge through the theory of phrase structure itself. Over the past few decades, a number of exceptions have been discovered to the wh-Island effect (especially Scandinavian languages, but also Hebrew, Slovenian, some varieties of English, etc.). I adopt a perspective here based on the idea that the wh-expression is not actually at the edge, and that these languages allow a further category, which provides an extra specifier. One might think that this robs the theory of empirical bite; however, I show that it predicts a new effect, which I call the Wh-Island Re-Emergence (WIRE) effect, which is unexpected in the standard system. This effect arises because, although it is possible to subjoin an element outside of a specifier, it is not possible to extract two elements from the same specifier, because of the way that Transitivity and Dimensionality interact. I show how the WIRE effect can be detected in Norwegian, Danish, Hebrew and English.
This consequence of the theory (that it is not possible to extract two elements from a single constituent) would seem to be falsified by languages, like Bulgarian, which allow multiple wh-movement, but I proffer a new analysis of this phenomenon, what I call a declustering approach, which explains not only why it is possible, but also why in such constructions only absorbed readings of the wh-quantifier are possible. I also show how the prediction, that in these languages the WIRE effect should not appear, is correct.

One of the consequences of the system is that there’s no real geometrical difference between subjects and objects, but there is a tradition in generative syntax that says that objects are easier to extract from than subjects. I try to argue that this is wrong.

I do this by first examining nominal island effects where the nominal is in object position, both those involving extraction from prepositional complements to nouns, and clausal complements to nouns, and I argue that these all boil down to specificity effects, which, in the mereological system, emerge in the same way as wh-island effects do: an internal part of the nominal subjoins to the object which constitutes the nominal as a whole, and, by Dimensionality, blocks any further subjunction from below it. I argue that the proposal I made in my 2013 book for nominal structure should be adopted. This is the idea that apparent complements to nouns are actually introduced by functional structure which is relatively high in the noun phrase. I extend to clausal complements my previous work on adpositional phrases and show that, once this perspective is adopted, definiteness can be understood as involving subjunction to the category D. Indefinites do not involve this subjunction, and so it is predicted by the system that extraction (subjunction) is possible from indefinites; definites and specifics, in contrast, have something subjoined to D, blocking extraction and deriving Fiengo and Higginbotham’s 1981 Specificity Condition. I then extend the analysis to wh-in-situ cases like Persian and Mandarin, and show how the same effect emerges. I adduce further evidence from Mandarin that subjunction of any constituent to D gives rise to specificity effects and concomitant blocking of extraction. With this in hand, I then show how the proposals extend to Slavic languages, and provide an alternative to the widespread NP analysis of these languages. I argue that the mereological approach is an improvement over the NP analysis of these languages, as it makes a more precise prediction about the collocation of overt articles and extraction, and I appeal to data from Mayan, Bulgarian, and Austronesian languages that backs up this direction of analysis.
The system also extends to some long-standing problems from the Specificity Condition, such as the Clitic Gate phenomenon in Galician and the Ezafe construction in Persian. The core difference between the systems is that in the mereological approach definiteness is syntactic, while in the Specificity Condition approaches it is semantic. This chapter also extends the theory to the extraction effects found in Scrambling constructions in Germanic, showing that classical analyses based on Huang’s Condition on Extraction Domains or on the Freezing Condition cannot be correct, and that syntactic definiteness captures the empirical patterns more effectively.

I argue that the differences between weak and strong definite articles in Germanic can be understood as whether a Det category subjoins to D or not. I show how this correlates with extraction and then extend the idea to a new approach to the classical paradigm in English, where the definite article shows a weaker effect than Possessors or Demonstratives. I argue that one way of thinking about this is in terms of markedness (where by markedness I mean the accessibility of a particular category to the parser in the moment/context). In English, the definite determiner the is actually ambiguous, one instance being equivalent to strong articles in German, and the other equivalent to weak articles. The former obligatorily subjoins to D, the latter does not, so only the latter is compatible with extraction. The idea then is that the weak version is marked in English, so the judgement of acceptability is essentially a judgement of markedness (which I conceptualize as ease of accessibility to the parser): extraction is grammatical, but requires a weak definite parse of the definite article, and that is marked in English. I also show how this idea of weak and strong definiteness can explain a number of apparent exceptions to the Specificity Condition, such as with process nouns and superlatives, and how it can handle long-standing problems such as extraction from definite nouns that are complements of verbs of creation. Finally I show how this system extends to the classical Complex Noun Phrase Constraint (CNPC) phenomena and the exceptions to the CNPC noted by Ross in 1967, drawing into the analysis recent work on Danish which makes morphological and semantic distinctions that follow from the overall analysis. Overall the core point of the chapter is that extraction from noun phrases is fundamentally regulated by whether the noun phrase has part of it subjoined to itself, a configuration which can be detected by how it is both pronounced and interpreted.

Finally I turn to subjects. Since subjects and objects are not geometrically distinct in mereological syntax (both are specifiers, or, more technically in the system, they are 2-parts, which is a kind of transitive notion of specifierhood), the system cannot appeal to CED type factors to explain why subjects are more difficult to extract from than objects. Given that it is effectively multidominant, the system also cannot appeal to Freezing type explanations, since these rely on chain links with distinct properties. This means that subject islands also must, essentially, be specificity islands, and I show how, with a careful evaluation of the data, this turns out to be correct. In the chapter I draw on a great deal of experimental work on Subject Islands, much of which emerged in an attempt to test the kinds of predictions that CED and Freezing accounts make, but also the predictions of Chomsky’s On Phases system, which blocks extraction from a phasal edge, but allows it to take place in parallel to both subject position and wh-scope position. I review experimental work on German, English, Russian and Hungarian, languages which have been argued to have aspects of their syntax which allow the predictions of these different accounts to be tested. I also consider the difference between pied-piped and stranded cases. In addition to the experimental work, I gather evidence from English, French, Spanish, and Greek showing that none of these accounts capture the right generalizations. I also look at more recent approaches, including those that appeal to the information-structure syntax interface, and extend some ideas that were first proposed by Bianchi and Chesi, who argue that the traditional distinctions of the On Phases theory (basically the differences between transitive, unergative and unaccusative predicates) are insufficient.
I argue that this approach is on the right lines but that a mereological interpretation of it has a number of advantages, and, of course, since that system cannot appeal to CED or Freezing type accounts, it has the advantage of ruling these out as possible analyses available to the language acquirer. To capture the empirical effects, I propose that English (for example) has two categories which host surface subjects (two T categories): one of these is similar to the scrambling clausal category I proposed for German, and the other is similar to the classical analysis of English T (that it bears an EPP feature). I suggest that the former is correlated with an individual topic interpretation (a categorical construal), while the latter is interpreted as an event/stage topic interpretation (a thetic construal), providing a different implementation of Bianchi and Chesi’s ideas within the mereological system. The theory correctly predicts that it is impossible to extract from subjects that are construed as topics, but possible to extract from subjects that are not. Just like I argued for definites in English, I take there to be a markedness effect with these two T categories: it is less marked to construe the subject as a topic (i.e. to use the T associated with this interpretation) so parses of extractions from subjects must involve the more marked T, and that is why the experimental data tends to show there is an effect. I show how this accounts for the experimental data discussed earlier in the chapter, and extend it to the case of Hungarian, where I argue that Hungarian topics, which seem to be transparent to extraction just in certain cases, are actually always transparent to extraction because they bear the feature that checks topicality on D rather than on a lower category that subjoins to D (making Hungarian parallel to Persian in some respects).
I briefly conclude the chapter with a discussion of relative clauses, another island effect that has been tied down to CED effects, and, appealing to recent work by Sichel on Hebrew, I show how they too involve structures which have a `free’ edge to the D category that ends up being filled in situations where they are specific or topical.

Further Consequences and Extensions

The system I develop has a number of advantages over the currently available system of phrase structure in minimalist generative linguistics. It is theoretically an improvement, as it doesn’t require extra sub-theories to deal with labelling or the copy/repetition problem; further, it leads to a theory of locality that, I think, explains why syntactic domains are local, and why the locality domains are what they are. It also has some empirical advantages, in that it predicts the WIRE effect, and its interaction with multiple wh-movement, and it captures the right lay of the empirical land in terms of extraction from nominal phrases in both subject and object positions. However, the book leaves a great deal still to do: there are two other areas of `locality’ in syntax that it doesn’t touch on. One is Williams Cycle (or Generalised Improper Movement) effects, where cross clausal dependencies seem never to target a position in the embedding clause that is lower than the position where the dependency is rooted in the embedded clause. The Williams Cycle issue is connected with the question of clause size in general, and how the system is to deal with embedded clauses that are not full CPs. The classical analysis of, for example, raising or ECM clauses is that they must be complements of the selecting verb, and they certainly seem to allow multiple extractions to take place from them, which would be ruled out in the mereological theory if they are specifiers. This means they must be complements, and then the issue becomes exactly what this implies for the interpretation of the two different types of part relation that Dimensionality derives. It also raises questions about how to deal with hyper-raising of the Zulu type (as opposed to the P’urhépecha type). 
The question of improper movement may, as argued in recent work by Keine and, in computational terms, by Graf, be a side effect of the kinds of head/features that are involved, and the feature theory assumed in the book is very simple, amounting to more or less the Minimalist Grammar feature theory of Stabler and others. This is an area for further development.

The second locality area is Minimality Effects of the type that Rizzi pointed out in his 1990 book. So far the system I’ve developed does not need to appeal to these. Wh-Islands are tackled via phrase structure, there is no head movement in the system because the kinds of syntactic objects it generates are headless (what Brody 2000 called `telescoped’), and, as mentioned above, A-movement Relativized Minimality effects may well depend on what the phrase structure of raising and ECM verbs is. However, there are many other cases where Relativized Minimality, or similar constraints, appear to hold (for example Beck Effects), and these currently fall outside the system.